Dynamic IP remote access for software developers with a custom-made solution (Python + Terraform + GitLab)

The Dynamic IP challenge

At Berg Software, we built a Dynamic IP solution to overcome some of the challenges of remote work. When working from home (like everyone else these days), Berg Software's developers use a VPN to connect securely to our on-premises infrastructure. In many cases, this is perfectly sufficient. Accessing the non-prod infrastructure, however, is more challenging:

- First, the current state of technology: hosting the non-prod infrastructure in the cloud and managing it as code (IaC, infrastructure as code) is now the norm rather than one option among many, so there is no way around it.

- Then, the stakes: the non-prod machines (where development, testing, and QA happen) must not be accessible to anyone outside the dev team (including the client, indexing bots, etc.). The non-prod machines are therefore closed off from the public internet, which makes them generally inaccessible.

- Last but not least, access control itself: to allow access to a non-prod machine, the firewalls only accept connections from specific, precise IP addresses. However, an individual's IP address is not always fixed: one might reset their router, or their service provider might reset it "just because." In these cases, our Ops team has to update the IP list, which is tedious, slow, and error-prone.

We therefore designed a Dynamic IP solution that does two things:

- Transform something we know (an FQDN, Fully Qualified Domain Name) into an IP address, which the firewalls need to create the access rules.

- Use a Dynamic DNS service to point the FQDN to the current IP address of the developer's machine.

The result is an automated, scheduled tool that refreshes the firewall IP list, eliminating manual Ops intervention and reducing downtime.

The dynamic DNS

To select a Dynamic DNS service, we derived the following requirements from our setup at the time:

- It should allow us to use subdomains under our main domain name.

- It should allow the Ops team to create/remove subdomains as needed.

- It should not require developers to create their own accounts; instead, the Ops team should be able to generate a personalised update link for each developer from a centralised console.

- It should not require any client application (or any complicated process) to update the FQDN.

- The generated links should not expire automatically, so that developers with a static IP address never need to update their FQDN.

Based on these requirements, we chose the Premium DNS package of ClouDNS: each developer bookmarks their personal update link in their regular browser and opens it to update their FQDN entry (the service records the public IP address from which the link is opened).

With each developer's FQDN pointing to their current IP address, we needed to update the firewall rules as soon as that IP address changed. Looking at the tools and technologies we already used every day, we combined three of them as follows:

- Python: translate each FQDN into an IP address and keep a list of the last known IP addresses to detect changes. Only if something changed, run the scripts that update the firewall.

- Terraform: update the firewall on our machines.

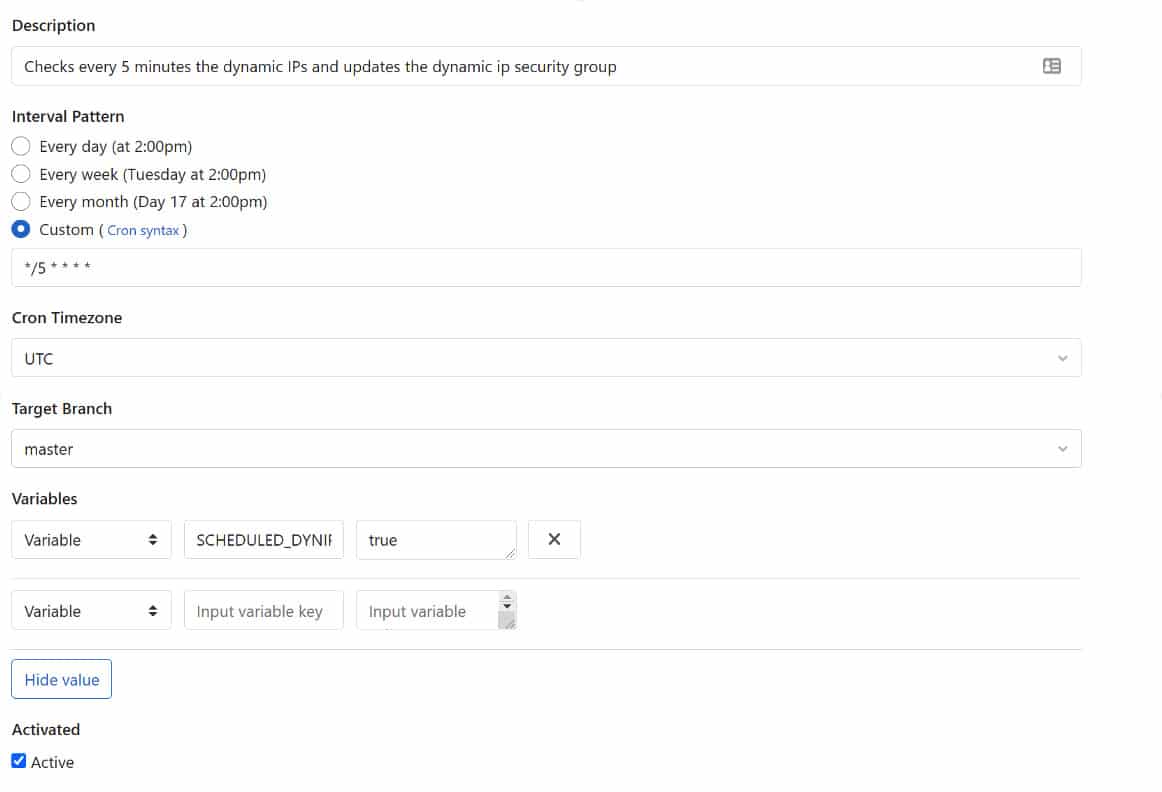

- GitLab CI/CD Scheduler: execute the workflow every few minutes.

So, let’s look into each one:

Python

We chose Python for the DNS part of the challenge because:

- its standard library includes the socket module,

- and socket.gethostbyname(hostname) returns the IPv4 address that a given FQDN resolves to.
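A minimal sketch of that lookup, assuming you prefer a failed lookup to be reported rather than crash the whole run: `socket.gethostbyname` raises `socket.gaierror` when a name cannot be resolved, and it only returns IPv4 addresses. The helper name `resolve_fqdn` is hypothetical.

```python
import socket
from typing import Optional


def resolve_fqdn(hostname: str) -> Optional[str]:
    """Return the IPv4 address hostname resolves to, or None if the lookup fails."""
    try:
        # gethostbyname() returns a single IPv4 address as a string
        return socket.gethostbyname(hostname)
    except (socket.gaierror, socket.herror) as exc:
        # Report the failure instead of aborting the whole run
        print(f"could not resolve {hostname}: {exc}")
        return None


if __name__ == "__main__":
    print(resolve_fqdn("localhost"))
```

Whether a failed lookup should drop that developer's rule or keep their last known address is a design decision; returning `None` leaves that choice to the caller.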

Our script has three main goals:

- transform all FQDNs into IP addresses,

- check whether any IP address differs from the previous run and, only if so, continue,

- generate a new Terraform file from a template describing the OpenTelekomCloud security group, with the updated IP addresses filled in.
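The template step can be sketched as follows, assuming a hypothetical template in which the `!!!ADDRESSES!!!` placeholder sits inside a Terraform list (here a `locals` block); the real OpenTelekomCloud security group template is not shown in this article. Each developer becomes one object with a `/32` CIDR (a single host) and the FQDN as its name, in the same format the script builds.

```python
from typing import Dict

# Hypothetical template: the real secgroups-dynip-tmpl is not shown here
TEMPLATE = """locals {
  dynip_addresses = [
!!!ADDRESSES!!!
  ]
}
"""

PLACEHOLDER = "!!!ADDRESSES!!!"


def render_entries(resolved: Dict[str, str]) -> str:
    """Build one {ip, name} object per FQDN, one per line, comma-separated."""
    return ",\n".join(
        f'{{ip = "{ip}/32",name = "{fqdn}"}}' for fqdn, ip in resolved.items()
    )


def render_template(template: str, entries: str) -> str:
    """Substitute the entries into the template; fail loudly if the placeholder is missing."""
    if PLACEHOLDER not in template:
        raise ValueError(f"placeholder {PLACEHOLDER} not found in template")
    return template.replace(PLACEHOLDER, entries)


if __name__ == "__main__":
    # Example FQDN and documentation-range IP address (both made up)
    resolved = {"jane.doe.dyn.example.com": "203.0.113.7"}
    print(render_template(TEMPLATE, render_entries(resolved)))
```

Raising an error on a missing placeholder, rather than silently skipping the write, makes a broken template visible in the pipeline log.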

Here’s the script extract:

    #!/usr/bin/env python3
    import os
    import socket
    import sys

    ipfile = 'ci/dynip/oldips'
    hostnames = ["DeveloperFirstName.DeveloperLastName.dyn.myDomain.com"]

    # Resolve every FQDN and build one entry per developer:
    # {ip = "<address>/32",name = "<fqdn>"}, separated by ",\n"
    entries = []
    for hostname in hostnames:
        print(hostname)
        ip = socket.gethostbyname(hostname)
        entries.append('{ip = "' + ip + '/32",name = "' + hostname + '"}')
    ips = ",\n".join(entries)
    print('Discovered ips:')
    print(ips)


    def inplace_change(filename_tmpl, filename_dest, old_string, new_string):
        # Read the template, replace the placeholder and write the result to filename_dest
        with open(filename_tmpl) as fr:
            s = fr.read()
        if old_string not in s:
            print(f'"{old_string}" not found in {filename_tmpl}.')
            return
        with open(filename_dest, 'w') as fw:
            print(f'Changing "{old_string}" to "{new_string}" in {filename_tmpl}')
            fw.write(s.replace(old_string, new_string))


    def read_file(file_path_old_ips):
        with open(file_path_old_ips) as fr:
            s = fr.read()
        print('Old ips:')
        print(f'"{s}" found in "{file_path_old_ips}".')
        return s


    def write_file(file_path_old_ips, new_ips):
        with open(file_path_old_ips, 'w') as fw:
            fw.write(new_ips)
        print(f'ips written in {file_path_old_ips}.')


    # Compare with the IPs from the previous run and stop early if nothing changed
    if os.path.isfile(ipfile):
        old_ips = read_file(ipfile)
        if old_ips == ips:
            print('no ip was changed')
            # Non-zero exit code when nothing changed; the shell only sees
            # the low 8 bits, i.e. 666 % 256 = 154
            sys.exit(666)
        print('ips were changed')

    write_file(ipfile, ips)
    inplace_change('ci/dynip/secgroups-dynip-tmpl', 'secgroups-dynip.tf', '!!!ADDRESSES!!!', ips)
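One design point worth considering: the script records the new IPs in `ci/dynip/oldips` before Terraform runs, so if the state file persists between runs and the Terraform step fails afterwards, the next run finds no change and does not retry. A minimal sketch of an alternative, assuming the state is saved only after a successful update; the function names and the `apply_update` callback are hypothetical. It also writes the state file atomically through a temporary file and `os.replace`.

```python
import os
import tempfile
from typing import Callable, Optional


def read_state(path: str) -> Optional[str]:
    """Return the IP list recorded by the previous run, or None on the first run."""
    if not os.path.isfile(path):
        return None
    with open(path) as fr:
        return fr.read()


def write_state_atomically(path: str, content: str) -> None:
    """Write to a temp file in the same directory, then rename it over the old file."""
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "w") as fw:
            fw.write(content)
        os.replace(tmp_path, path)  # atomic rename on POSIX (same filesystem)
    except BaseException:
        os.unlink(tmp_path)
        raise


def sync_ips(path: str, ips: str, apply_update: Callable[[str], bool]) -> str:
    """Return 'unchanged', 'updated' or 'failed'; state is saved only after success."""
    if read_state(path) == ips:
        return "unchanged"
    if not apply_update(ips):
        return "failed"  # state left untouched, so the next run retries
    write_state_atomically(path, ips)
    return "updated"
```

In this shape, `apply_update` would wrap the template rendering and the targeted Terraform run, and report whether they succeeded.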

Terraform

To help with the maintenance and change management of our cloud infrastructure, we use Terraform as our infrastructure-as-code solution, with the OpenTelekomCloud provider. (This is only because we use the OTC cloud; the same result can be achieved on AWS or Azure with their respective Terraform providers.)

This brought us to the next challenge. Since the script runs automatically, we needed to plan, approve, and apply changes automatically, but only for the specific security group. All other pending changes had to be ignored (e.g., changes caused by a new base image, or any incompletely checked change committed to the repo).

Terraform offers an elegant solution to this challenge: the -target option restricts plan and apply to a single resource (here, the dynamic-IP security group), and -auto-approve skips the interactive confirmation before changes are applied.

This can be achieved using:

    # Plan only the dynamic-IP security group; "|&" (bash) pipes stdout and stderr into tee
    ./ci/app/terraform plan \
        -target=opentelekomcloud_compute_secgroup_v2.secgrp_everithyng_dynip \
        -out=project-plan02.dat |& tee -a ./logs/plan-dyn-$today.log

    # Apply the same targeted change without the interactive approval prompt
    ./ci/app/terraform apply -var-file="./ci/secrets/secret.tfvars" -auto-approve \
        -target=opentelekomcloud_compute_secgroup_v2.secgrp_everithyng_dynip |& tee -a ./logs/planautopply-dyn-$today.log

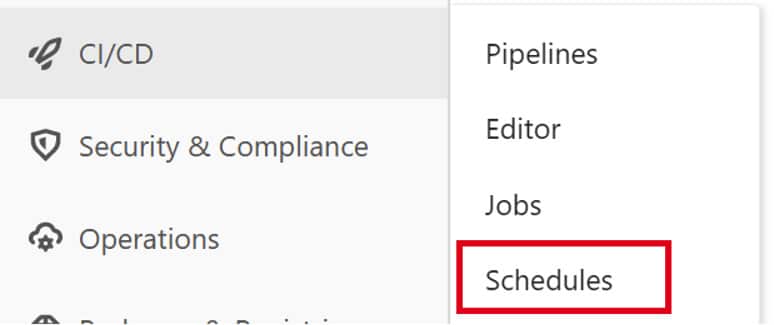
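The wrapper script `./ci/bin/x_dynsecurity.sh` is not shown in this article. A hypothetical Python equivalent of what it has to do: run the DNS script, stop if it reports "no change", otherwise run the targeted `plan` and `apply`. Because the script exits with 666 and only the low 8 bits of an exit status reach the parent process, the caller sees 154. The script path `ci/dynip/dynip.py` and the injectable `run` callback are assumptions; the Terraform arguments are the ones from the commands above.

```python
import subprocess
from typing import Callable, List

TARGET = "opentelekomcloud_compute_secgroup_v2.secgrp_everithyng_dynip"
TERRAFORM = "./ci/app/terraform"
NO_CHANGE = 666 % 256  # sys.exit(666) reaches the parent process as 154

Runner = Callable[[List[str]], int]


def run_subprocess(cmd: List[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(cmd).returncode


def dynip_pipeline(run: Runner = run_subprocess) -> int:
    """Return 0 if nothing changed or the update succeeded, else the failing exit code."""
    # Hypothetical location of the DNS script shown earlier
    code = run(["python3", "ci/dynip/dynip.py"])
    if code == NO_CHANGE:
        return 0  # IPs unchanged: skip Terraform entirely
    if code != 0:
        return code
    steps = [
        [TERRAFORM, "plan", f"-target={TARGET}", "-out=project-plan02.dat"],
        [TERRAFORM, "apply", "-var-file=./ci/secrets/secret.tfvars",
         "-auto-approve", f"-target={TARGET}"],
    ]
    for cmd in steps:
        code = run(cmd)
        if code != 0:
            return code  # stop at the first failing Terraform step
    return 0
```

Checking exit codes step by step matters here: in a bash pipeline such as `terraform apply ... |& tee ...`, the exit status is that of `tee` unless `set -o pipefail` is enabled, so a failed apply could otherwise go unnoticed.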

GitLab CI/CD

We use GitLab.com for source control and to run our project's CI/CD pipelines. We therefore extended the .gitlab-ci.yml file with a new job that runs only under a specific condition. This job should be the only one executed when the pipeline is started by the scheduler.

Step details:

    dynip-otc:
      stage: dynip
      before_script:
        - apk --no-cache add bash git openssl py-pip
      script:
        - ./ci/bin/x_dynsecurity.sh
      tags:
        - project
      artifacts:
        paths:
          - .terraform/
          - project-plan02.dat
          - logs/
        expire_in: 1 week
      only:
        # Run only on master, and only when SCHEDULED_DYNIP is "true" (set by the pipeline schedule)
        refs:
          - master
        variables:
          - $SCHEDULED_DYNIP == "true"
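A regular expression such as `/^true*/` is looser than it looks: the `*` applies only to the final `e`, so it matches any value that starts with `tru`. A quick check with Python's `re` module (this simple pattern behaves the same way in RE2, which GitLab uses for `=~`) shows why an exact comparison such as `$SCHEDULED_DYNIP == "true"` is the safer condition for a job that changes firewall rules. The sample values are made up.

```python
import re

LOOSE = re.compile(r"^true*")  # same pattern as /^true*/


def loose_match(value: str) -> bool:
    # re.match only anchors at the start of the string, like ^ in the pattern
    return LOOSE.match(value) is not None


def exact_match(value: str) -> bool:
    return value == "true"


for value in ["true", "tru", "trueee", "trust", "truncated", "false"]:
    print(f"{value!r:12} loose={loose_match(value)} exact={exact_match(value)}")
```

Every sample except `false` passes the loose pattern, while only `true` passes the exact comparison.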

Conclusion

By using no more than three simple and accessible tools (Python, Terraform, GitLab), we were able to:

- create a Dynamic IP solution that lets our software developers work from home without interruption,

- with virtually real-time IP updates,

- with no expensive or complicated systems,

- and no dedicated personnel for manual actions/updates.

How do *you* do it? Care to share your solution?